Make your site irresistible to visitors and Google

- Local SEO

- Customer Focused

- Great Support

We are Gold Coast SEO experts servicing locals and beyond. Our approach uses our 25+ years of web development to your advantage.

High-value SEO services are our specialty. When you work directly with a skilled developer, your site responds much more swiftly without a bloated price tag. Nobody likes a slow website.

We consistently achieve successful results for long-term clients who pay only $440 per month and stay at the top of searches on competitive keywords. Other clients prefer to invest more because it is worth it. A few of our SEO and user interface design ideas are listed below, so see if you agree with our concepts.

Highlight Text using #:~:text=

How long does SEO take to work?

Keyword Find a specific keyword in a site

Page and SERP Titles do not Match

Adwords is where you pay Google to place your listing at the top or side of the search results. Refer to Organic result.

Check our article on SEO vs Adwords for info on the difference between SEO and Adwords.

Adwords is a bidding mechanism where the price you pay for a click varies depending on competition.

AI is essentially using data to make informed decisions based on that data. It is not robots gone mad!

Hence Google has an immense amount of data on which to base decisions, such as identifying related content and the context of a query. Approximately 1 in 10 queries typed into Google are spelt incorrectly.

Google uses AI to analyse the quality of content.

Search Engine Journal claimed (March 6th 2025) that AI Overviews (AIOs) appear in 42.5% of search results.

This leads to declining click-through rates, especially for informational queries, as searchers have the query answered without leaving the search page, i.e. Google.

The counter argument is that there are more searches because information is so readily available. Search volumes are larger than ever. Hence SEO is now more nuanced, and given that SEOs have adapted to algorithm changes, they may still be the best people to help gain web traffic.

An Anchor is the text used in a link.

In the above example the Anchor is Google.

Some SEOs vary their Anchors due to Google penalising the over-use of exact match Anchors.

It is still a good idea to use some exact match anchor texts. However, in general, you want to mix it up with other types of anchor texts.

The actual link structure is below.

<a href="https://www.example.com" title="description about your Anchor page">Anchor Text</a>

"Some queries (called anonymized queries) are not included in Search Console data to protect the privacy of the user making the query.

Anonymized queries are those that aren't issued by more than a few dozen users over a two-to-three month period. To protect privacy, the actual queries won't be shown in the Search performance data"

Ahrefs have a new report (2025) that can uncover "Anonymous Queries". About 46% of all clicks in GSC come from queries marked as "anonymous."

If you thought that anonymous queries were irrelevant queries, that is not the case for the clients I have checked. From an SEO perspective, the keywords clicked are very important because you can discover the intent of the users visiting the site. In some cases 87% of queries were anonymized in GSC.

Backlinks are simply inbound links into your website from other sites.

Links are the "bread and butter" of SEO. Not all links are equal. A link from a quality site with a good reputation on a specific topic is worth far more than a spammy link on a forum.

Update Nov 2022

Google announced that links may be less important to the Google Search ranking algorithm in the future.

Concentrating on quality content is always worth the investment. SEOs relying on paid link schemes will suffer.

Google Analytics describe bounce rate as "Bounce Rate is the percentage of single-page visits (i.e. visits in which the person left your site from the entrance page)." In Google Analytics 4 it is described as "The percentage of single-page sessions in which there was no interaction with the page." With the new event model they measure scrolls and other events.

A "bounce" occurs when a user lands on a page and then leaves without any other interaction. In some cases this is not a problem. For example, on a timetable page the user gets the necessary information and then leaves.

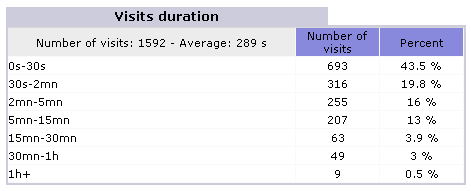

Sometimes this information is presented differently, for example some stats packages will give you a visitor duration figure that can be a form of bounce rate.

Hence in the above stats 43.5% of visitors left within 30 seconds.

These figures are always relative and numbers should vary depending on the type of site.

For example if a site is getting a broad set of hits on keywords the bounce rate will be higher - but you are still getting more visitors with good SEO.

A high bounce rate isn't necessarily a bad thing; it depends on the context of the page. It is probably not a feature of the Google Algorithm. It is a feature of an SEO campaign. We want to see pages designed to attract visitors and keep them on the site.

Firefox, Internet Explorer, Safari and other browsers are used to display web pages on computers and smart devices.

Cannibalization is an SEO issue that occurs when multiple pages on a site target the same keyword(s) and appear to serve the same purpose. This can harm each page's search engine rankings as Google has to choose which page to rank highest.

Some sites have pages that are very similar to others with duplicate content. Search engines allocate a crawl budget for each site. According to Google, duplicate content can waste this crawl budget.

Refer to Crawled by Google.

Chunking is a User Interface Design concept that relates to breaking information up into bite-size chunks so it can be read easily - much like a newspaper. George Miller asserted that humans can hold about seven (plus or minus two) pieces of information in short-term memory; chunking allows information to be placed in digestible batches.

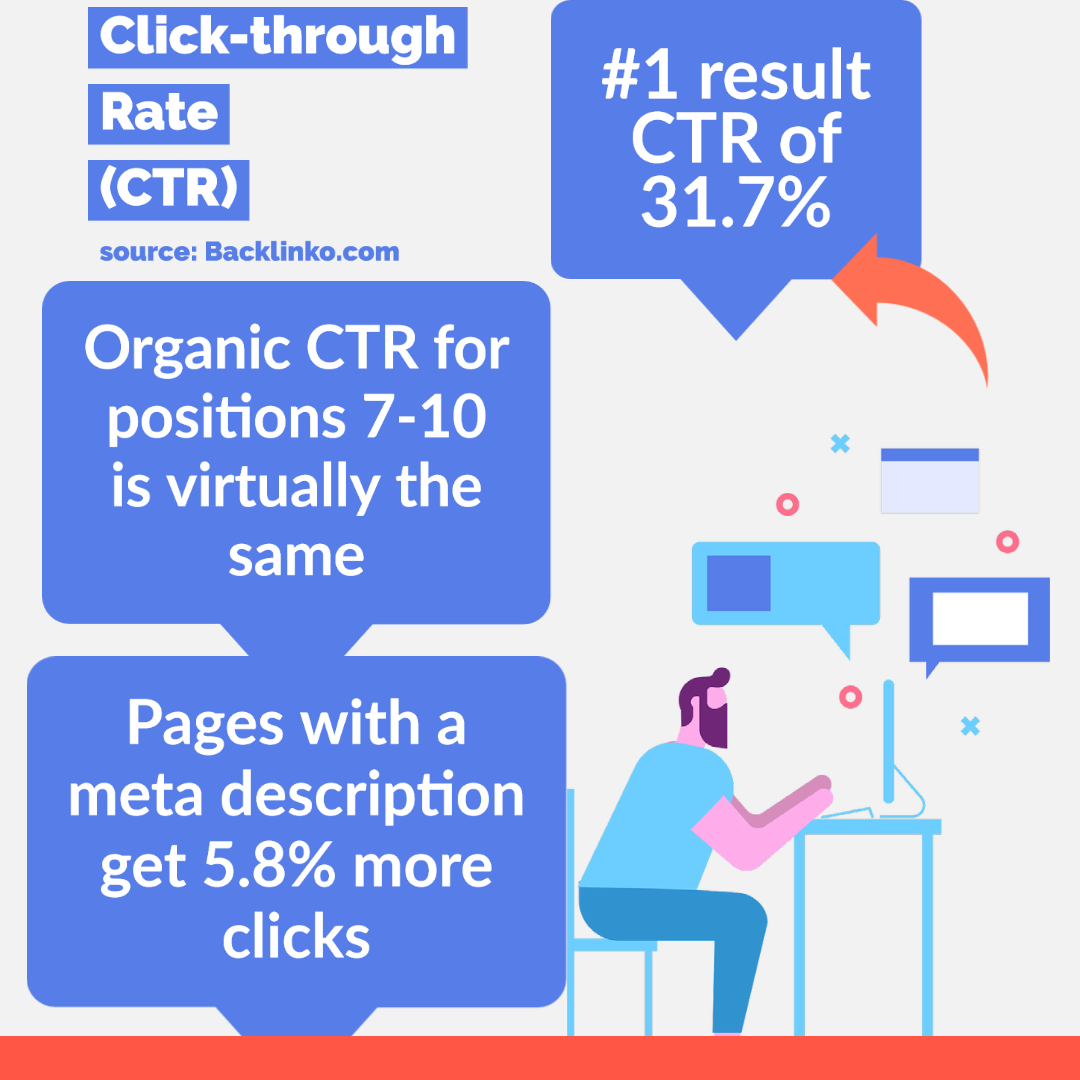

The proportion of visitors to a web page who follow a hypertext link to a particular site.

Backlinko.com published an article on CTR based on 5 million Google search results-:

Having a site on the first page of Google is important. And if you are not in the top 1-3 there is still some value (even towards the bottom of the page).

The meta description is sometimes used by Google after the title of the page in their SERP Results. If there is no meta description Google will pull information from your page that it considers relevant.

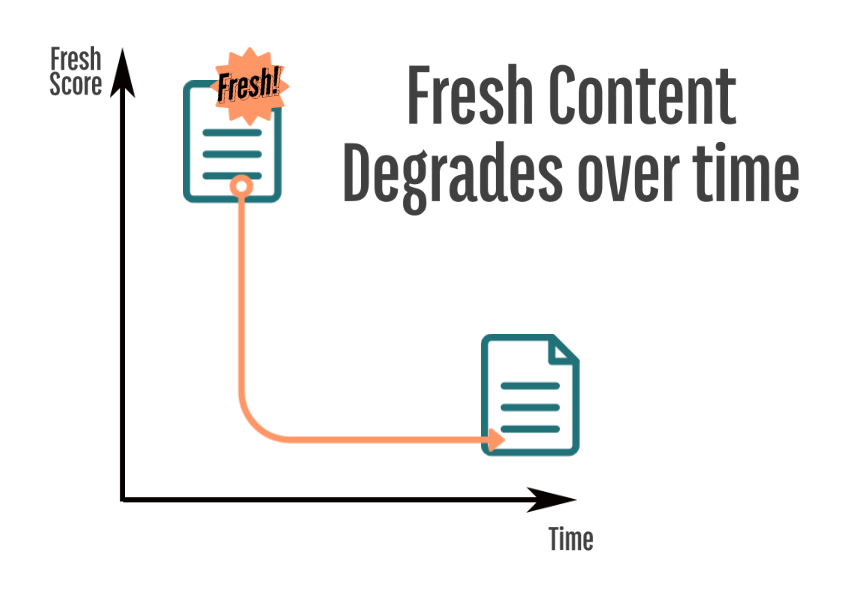

Fresh content ranks better. How often to refresh content or refresh the site depends on the context.

Good SEO Content includes-:

You create an informative article with case studies, examples, rich media and statistics. Your well written and edited article is designed to be both entertaining and actionable. It is so exciting to see that article do well in Google.

Over time you may notice the article drop in rankings. This is termed "Content Decay". A knowledgeable SEO will monitor the article and keep aware of changes and improvements that can add value. We like to add a YouTube video, a table, a unique image to keep articles fresh. With good SEO software you can monitor the ranking and the page that ranks. The occasional link and promotion on social media also helps. A good blog always starts with a good idea.

Hence where your blog/article resides is very important. If it resides on a site you do not have access to because it was a "guest post" or worse still a paid link you may not have access to update that article.

Revisiting and updating articles is part of a good SEO strategy. Building a system around updating content makes sense.

Below is an example of a topical issue in Australia "ACCC and News Corp vs Google and Facebook". What is amazing is a site like this (goldcoastlogin.com.au) can rank on the first page against the might of news media sites on a variety of terms including "newscorp vs google" and below-:

Conversion rate is the rate of sales or effective contacts per visitor. Hence a well designed site may convert 1 in 20 visitors and a poor or more diverse site may convert 1 in 200 visitors.
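As a quick worked example of the figures above, the sketch below turns "1 in 20" and "1 in 200" into percentages. The function name is just an illustration.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Return sales or effective contacts per visitor, as a percentage."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return 100.0 * conversions / visitors


well_designed = conversion_rate(1, 20)   # 1 in 20 visitors convert
diverse_site = conversion_rate(1, 200)   # 1 in 200 visitors convert
print(f"{well_designed:.1f}% vs {diverse_site:.1f}%")
```

With the same traffic, the first site would produce ten times as many sales or contacts as the second.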

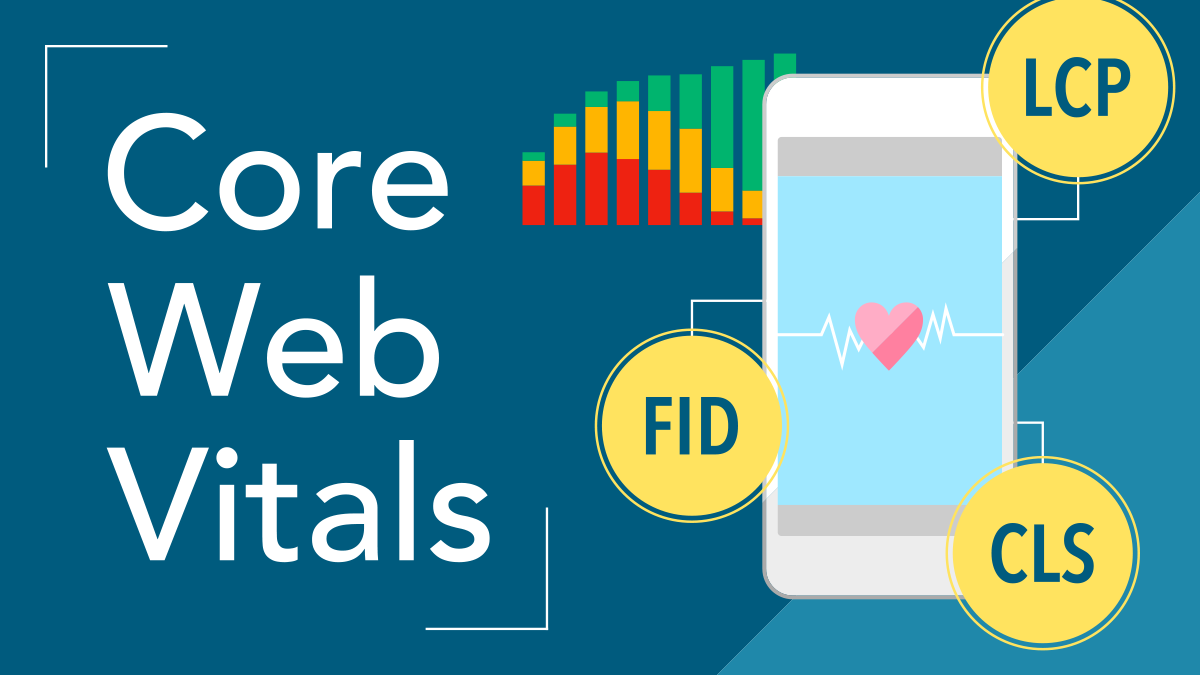

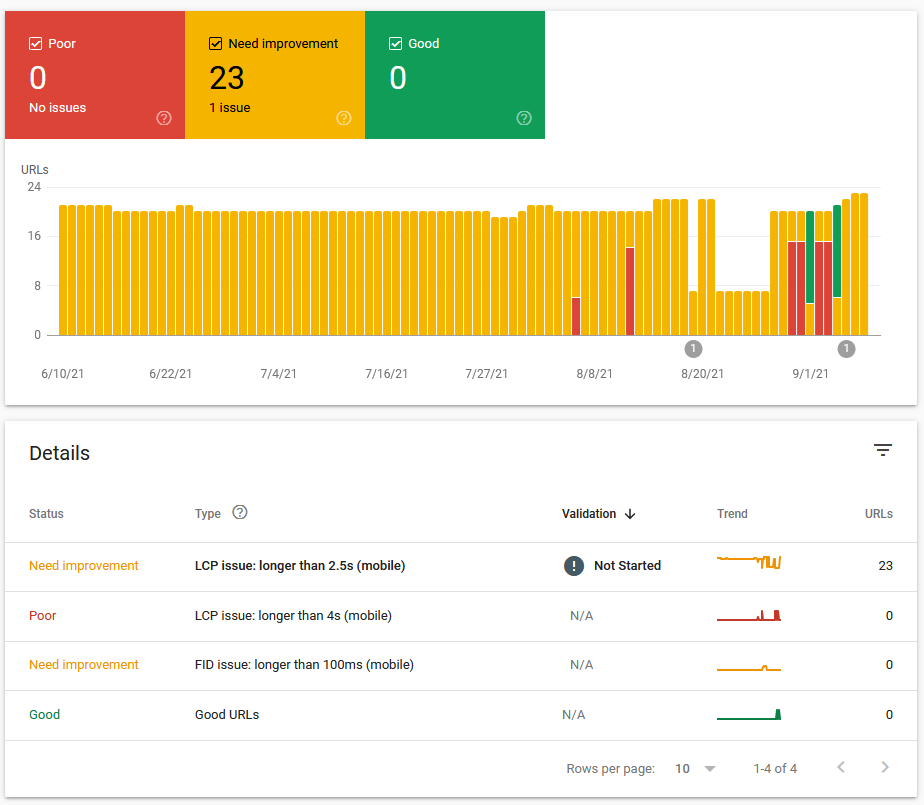

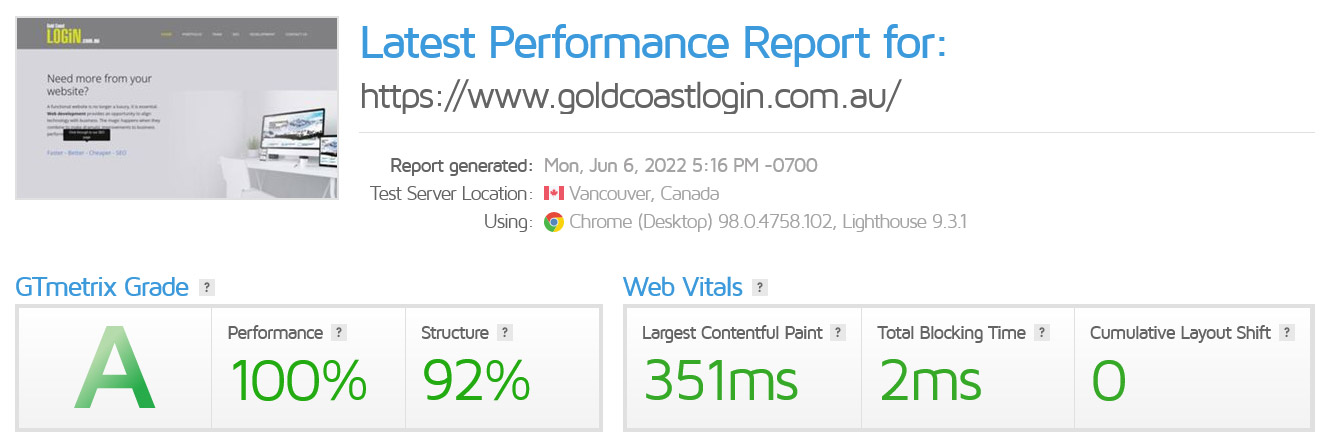

"A set of metrics related to speed, responsiveness and visual stability, to help site owners measure user experience on the web."

Core Web Vitals can be viewed in Google Console. They are good for SEO as they can give your site more recognition and will penalise poorly structured sites.

The idea is to have core user experience measures (Core Web Vitals) for pages. Actually it is more about groups of pages and gives insight into how Google classifies your site.

The Largest Contentful Paint (LCP) metric reports the render time of the largest image or text block visible within the viewport, relative to when the page first started loading.

First Input Delay measures the time from when a user first interacts with a page (i.e. when they click a link, tap on a button, or use a custom, JavaScript-powered control) to the time when the browser is actually able to begin processing event handlers in response to that interaction. Note that Google replaced FID with Interaction to Next Paint (INP) as a Core Web Vital in March 2024.

Unexpected movement of page content usually happens because resources are loaded asynchronously or DOM elements get dynamically added to the page above existing content. Cumulative Layout Shift calculates how often this is happening for real users. Losing your place whilst reading an article is annoying and often happens in news sites where ads and other content are loaded.

| Metric | Good | Needs improvement | Poor |
|---|---|---|---|
| LCP | <=2.5s | <=4s | >4s |
| FID | <=100ms | <=300ms | >300ms |
| CLS | <=0.1 | <=0.25 | >0.25 |
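The thresholds in the table can be turned into a small rating helper. This is a minimal sketch: the numbers are copied from the table, while the function and dictionary names are assumptions made for illustration.

```python
# (upper bound for "Good", upper bound for "Needs improvement"), taken from the table.
THRESHOLDS = {
    "LCP": (2.5, 4.0),    # seconds
    "FID": (100, 300),    # milliseconds
    "CLS": (0.1, 0.25),   # unitless layout-shift score
}


def rate_metric(metric: str, value: float) -> str:
    """Classify a measured value as Good, Needs improvement or Poor."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "Good"
    if value <= needs_improvement:
        return "Needs improvement"
    return "Poor"


print(rate_metric("LCP", 3.2))   # a page whose largest element renders after 3.2s
```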

To index pages search engines crawl your page. If your page is updated Google will eventually crawl the page and index the new information.

1 Use Google Console to check.

2 Type site:yoursitename.com into Google to see the pages indexed.

site:yoursitename.com

Google regularly visiting your page and crawling is beneficial. Developing a strategy for Google to return is important.

Crawlers (or bots) typically follow these steps:

1 Fetch the HTML of a webpage.

2 Parse links and content from the HTML.

3 Follow internal and external links to discover more pages.

4 Index the content (if it's a search engine or AI model).
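The parsing step can be sketched with Python's standard library. This is an illustration only, not how any particular crawler is built: the fetch step is replaced by a hard-coded page so the example runs offline, and the class and function names are assumptions.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag found in the HTML."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the page URL.
                self.links.append(urljoin(self.base_url, href))


def split_links(base_url: str, html: str):
    """Return (internal, external) links discovered in the HTML."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    site = urlparse(base_url).netloc
    internal = [u for u in collector.links if urlparse(u).netloc == site]
    external = [u for u in collector.links if urlparse(u).netloc != site]
    return internal, external


# Stand-in for the fetched HTML of a page (hypothetical content).
page = '<p><a href="/about">About</a> <a href="https://www.google.com/">Google</a></p>'
internal, external = split_links("https://www.example.com/", page)
print(internal, external)
```

A parser like this only sees links that are present in the HTML as delivered by the server; anything added later by JavaScript is invisible to it.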

Sites are increasingly being crawled by AI bots. One distinction between AI bots and search engines like Google is that Google will process JavaScript, whereas most AI bots do not. This is significant if your site uses sophisticated JavaScript to render pages instead of server-side processing. Server-side processing renders pages as HTML.

Every resource on a webpage carries an overhead.

Limiting the number of requests is very important.

Look at the following (from Google Chrome developer tools) just to indicate the extra information every resource in a page carries-:

When crawling a site, Google checks whether a resource has been modified using either ETag or Last-Modified.

Last-Modified is sent from the server - it is the date in GMT that the file was last updated. It will match the server settings for that file.

This should match your sitemap.xml <lastmod>2025-07-15T13:00+10:00</lastmod> tag.

The Expires header in an HTTP response (above) specifies a date and time after which a resource in the browser's cache is considered stale and should be re-requested from the server. This is set specifically by the web developer. How it is set varies with the platform used.
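To illustrate how the sitemap date and the Last-Modified header relate, the sketch below converts the lastmod value quoted above into the GMT format used by HTTP headers, then compares it with the date a crawler might send back in an If-Modified-Since request. The function names are assumptions; a real server or platform normally handles this for you.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime


def last_modified_header(modified: datetime) -> str:
    """Format a timestamp as an HTTP date in GMT."""
    return format_datetime(modified.astimezone(timezone.utc), usegmt=True)


def is_not_modified(if_modified_since: str, modified: datetime) -> bool:
    """True when the crawler's cached copy is still current (a 304 response)."""
    cached = parsedate_to_datetime(if_modified_since)
    # HTTP dates have one-second resolution, so drop microseconds first.
    return modified.replace(microsecond=0) <= cached


# The <lastmod> value from the sitemap example, parsed as ISO 8601.
lastmod = datetime.fromisoformat("2025-07-15T13:00+10:00")
header = last_modified_header(lastmod)
print(header)
```

Note that 13:00 at +10:00 is 03:00 GMT on the same day, which is why the two values look different even though they describe the same moment.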

A good combination of a sitemap.xml, structured data and meta tags and fast loading efficient web pages can make it easier for Google to Crawl your website.

Data-driven content is using your company's data in your content. Journalists are more likely to link to or mention your content if it is based on solid data.

Example: 20% of purchasers of product x mentioned feature y as the key reason for their purchase.

Corelogic are a company that have built a reputation on providing data on the changing value of housing.

A Deep Linking SEO Strategy relies upon high quality content linking to other high quality content rather than to the home page or about us page.

The emphasis must be on quality content and related to other valuable content.

Based on the width of the device, your ideal meta description tag can be between 160 and 320 characters long (920-1,840 pixels). These descriptions can really help garner clicks. Adwords experts are very good at writing enticing descriptions to get you to click on a paid ad. SEOs also pay attention to this section. However, depending on the query, Google may adapt snippets of the page instead of running the actual description you enter in this meta tag in the header of a page. The Facebook OG:Description tag allows for 300 characters. So designing blogs with a 300 character limit makes sense.
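One way to design around the 300 character limit is to trim a description at a word boundary before it goes into the tag. The helper below is a rough sketch; its name and the trimming rules are assumptions, not a Google or Facebook requirement.

```python
OG_LIMIT = 300  # Open Graph description limit quoted in the text


def fit_description(text: str, limit: int = OG_LIMIT) -> str:
    """Trim a description to the limit without cutting a word in half."""
    text = " ".join(text.split())          # collapse runs of whitespace
    if len(text) <= limit:
        return text
    # Keep one extra character so a word ending exactly at the limit survives.
    cut = text[:limit + 1].rsplit(" ", 1)[0]
    return cut[:limit].rstrip(",;:- ")


long_text = "word " * 100                  # 500 characters of filler
trimmed = fit_description(long_text)
print(len(trimmed))
```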

A Domain Metric is a rating or score from third party SEO software like Moz DA (Domain Authority) PA (Page Authority) or Ahrefs DR (Domain Rank). Domain-based metrics are useful for website owners to see a trend for their own domains overall. A domain-metric can NEVER give you an indication of what quality a certain link will have. We often see a page rise above its predicted position by these tools. Some tools can be wrong in predictions by a factor of 10. So if someone claims you are only getting x amount of traffic compared to another site - unless they are Google themselves or have access to your stats they do not really know.

Google rank a page not necessarily based on the power of the domain - we know this because it is what they say and we can see clear examples.

Domain names, e.g. www.GoldCoastLogin.com.au, are essentially server addresses that point to where your website is located. .com.au domains have specific rules governed in Australia.

Managing your domain name and its renewal is very important. The domain name should always be in the business entity's name (not your web developer's).

Currently there appears to be little reason to have numerous domain names unless you wish to prevent those names from being available to others.

Refer to Managing your domains a quick guide where you can read about some of the horror stories my clients have experienced with domain name resellers.

In 2012 Google announced it would penalise low-quality exact match domains (EMD penalty). This algorithm change targets "Exact-match" domains with low-quality content.

So if someone offers you a domain name that is a match for your keywords don't bother purchasing it unless you are going to really work on that domain.

The key to success in Google is quality content. Spammy techniques like "Exact-match" domains and Paid Links can lead to penalties.

If you have a domain name with keywords in it, you can still rank very well, but your content needs to be of high quality.

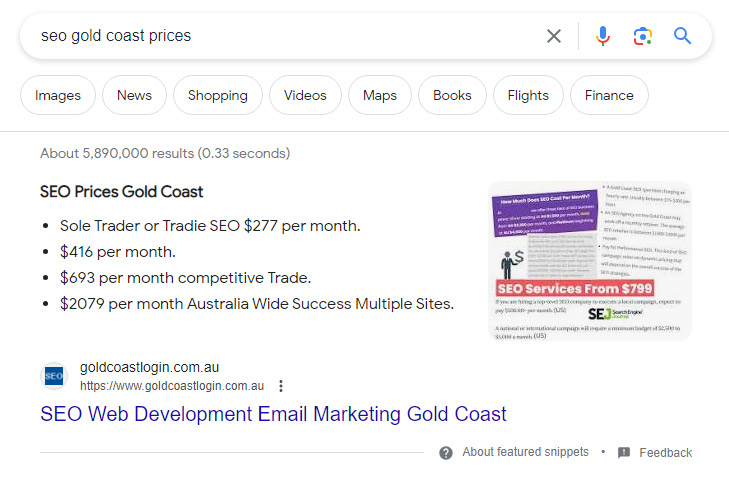

"We display featured snippets when our systems determine this format will help people more easily discover what they're seeking"

Featured Snippets are the ultimate for an SEO. They are usually an enhanced listing at the very top of search results. The coveted #1 position.

Having well-formatted HTML code that follows Principles of Interaction Design with concise answers to specific queries helps.

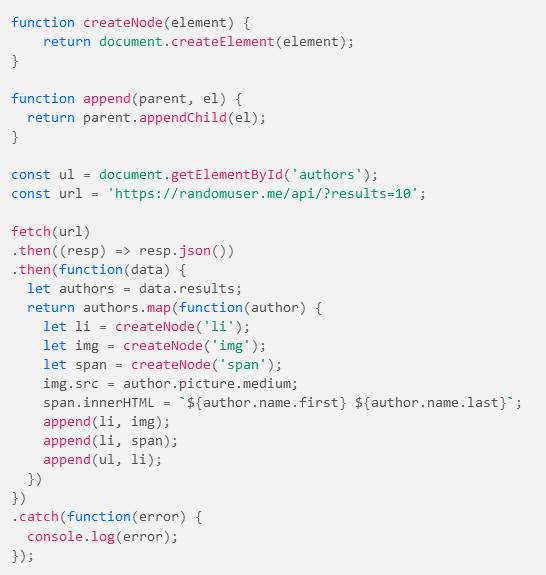

The web functions partly on APIs that contain information (data) and can be accessed. This is often reached by a JavaScript Fetch query. These have evolved and are not just useful for large Tech Giants. We can use them to add SEO value to a page. By developing consistent systems with re-usable components we can "Fetch" data from any site and display on another related site. Then as the image above shows create code to format that information into the style of the page.

Fetch queries are crawled by Google. We test this by using Google Console-:

1 From Google Console inspect the Url.

2 Click the "page is mobile friendly".

3 Click view tested page.

4 Check HTML to see what Google is actually crawling.

Certain fraudsters will attempt to gain access to your database. Often they bombard certain pages that use parameters to query a database. They tack on SQL code to try to find the database names, tables, fields, etc.
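A common defence against tacked-on SQL is to pass page parameters to the database as bound values rather than building the query from strings. The sketch below uses an in-memory SQLite database; the table, column and function names are hypothetical.

```python
import sqlite3

# Hypothetical news table standing in for a real site database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE news (news_id INTEGER, title TEXT)")
conn.execute("INSERT INTO news VALUES (143, 'Refresh your browser')")


def find_article(conn: sqlite3.Connection, raw_id: str):
    """Look up an article using a bound parameter instead of string concatenation."""
    # The ? placeholder makes the driver treat raw_id purely as data,
    # so SQL appended to the parameter is never executed.
    cursor = conn.execute("SELECT title FROM news WHERE news_id = ?", (raw_id,))
    return cursor.fetchall()


legit = find_article(conn, "143")
attack = find_article(conn, "143 OR 1=1 UNION SELECT name FROM sqlite_master")
print(legit, attack)
```

The legitimate request returns the article, while the tampered parameter simply matches nothing.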

We catch the IP address and check with scamalytics.com to see if they are a known scammer.

Scamalytics.com also give an assessment of the ISP you use. This is useful to know because the last thing you want is to be associated with a fraudulent ISP.

If you find your server running out of database connections or other intermittent issues, check the Server Logs for persistent hacking attempts from a specific IP Address.

You can also check the location of the fraudsters by going to tools.keycdn.com/geo and keying in the offending IP Address.
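Checking server logs for persistent attempts from one IP address can be partly automated. The sketch below is an assumption-heavy illustration: the log format, the sample lines and the list of SQL markers are all hypothetical, and real logs will need their own parsing.

```python
from collections import Counter
from urllib.parse import unquote_plus

# Hypothetical access-log lines (IP address first, then the request).
LOG_LINES = [
    '203.0.113.7 "GET /news?id=1+UNION+SELECT+name+FROM+users HTTP/1.1" 200',
    '203.0.113.7 "GET /news?id=1%27+OR+%271%27=%271 HTTP/1.1" 200',
    '203.0.113.7 "GET /news?id=1;SELECT+SLEEP(5) HTTP/1.1" 500',
    '198.51.100.4 "GET /news?id=143 HTTP/1.1" 200',
]

# A few strings that often appear in tacked-on SQL (illustrative, not exhaustive).
SQL_MARKERS = ("union select", "information_schema", "' or ", "sleep(")


def suspicious_ips(lines, threshold=3):
    """Return IPs with at least `threshold` requests containing SQL markers."""
    hits = Counter()
    for line in lines:
        ip = line.split(" ", 1)[0]
        decoded = unquote_plus(line).lower()   # undo %27 and + encoding
        if any(marker in decoded for marker in SQL_MARKERS):
            hits[ip] += 1
    return [ip for ip, count in hits.most_common() if count >= threshold]


print(suspicious_ips(LOG_LINES))
```

Any IP address it reports can then be checked with the tools mentioned above.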

Freshness of content is increasingly important to Google; however, this depends on whether the category of query should be fresh. Hence just changing your site every day is not necessarily going to work here. It's important to have a site that can change easily and in a way that improves SEO value.

QDF refers to a query that deserves freshness. So Google will enhance sites with fresh content if the query deserves freshness.

A full-stack web developer is proficient in both front-end (client-side) and back-end (server-side) development. This means they can handle the whole development process, from designing the user interface to managing databases and server configurations.

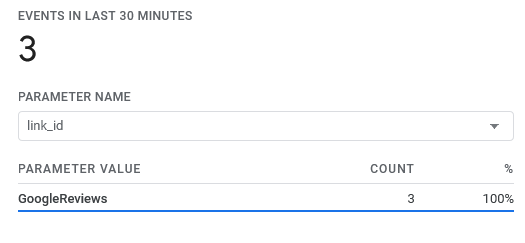

With the introduction of Google Analytics 4 there is a transition to an event-based data model.

"Google Analytics 4 is our next-generation measurement solution, and is replacing Universal Analytics. On July 1, 2023, Universal Analytics properties will stop processing new hits."

More control and access to data on your website allows you to measure and discover better, understand your online visitors and create optimal experiences for them.

You place scripts onto your page that track user behaviour. The new event-based data model allows you to manage and report better.

With Google Analytics 4 event-based model you can track an external link on a page. In the example below we added an ID to a link which allows us to track how often that link is clicked. Tracking the number of clicks to an external reviews page is reported as per below.

1 Add an id to a specific link (event) you wish to identify (GoogleReviews)

2 Engagement>Events>click>PARAMETER NAME>link_id

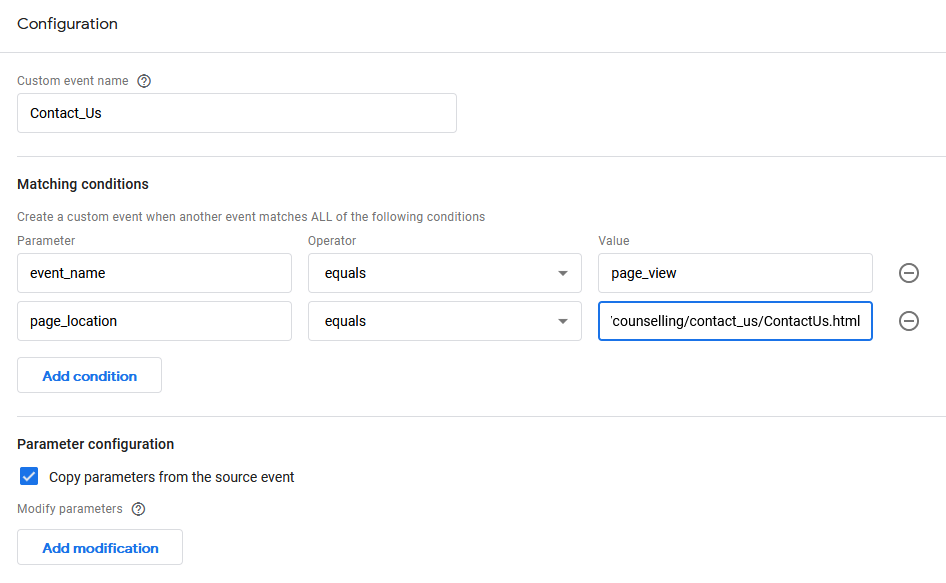

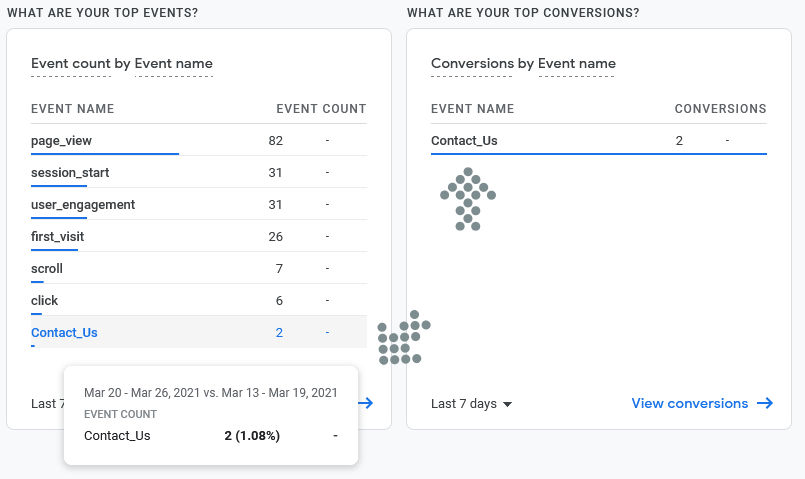

We created a Custom Event called Contact_Us which fires when our specific page is loaded. You can add logical conditions. You may choose to test a landing page and see if it is effective. You can mark it as a conversion meaning you can quickly check your specific conversions for a site with the reporting systems in Google Analytics 4. Anyone with web programming experience in virtually any language will recognise the naming conventions used and differentiate between a default event and one you have specifically named. By using obvious and consistent logic an SEO can work on multiple sites fluently. You do need to invest time wisely to ensure you get the most value.

These are simple examples; more advanced reports may track product sales in detail.

Using Google Analytics for SEO

Google Console reports valuable information on Google searches. Click and impression information helps analyse pages that are working well or could work better.

A study by ahrefs (2023 SEO statistics) indicated "46.08% of clicks in Google Search Console go to hidden terms" meaning you see many of the search terms used to get to your site but not all.

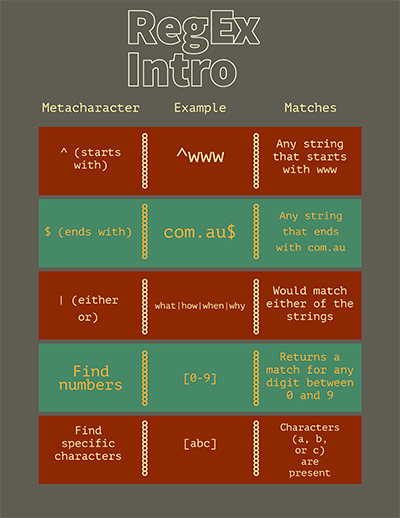

You can now apply regular expressions (regex) to Google Console performance stats:

This regular expression might show results that indicate your content should easily answer questions. You can use it to create advanced filters to include or exclude more than just a word or a phrase.
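As an illustration of the kind of filter described, the pattern below matches queries that start with a question word. It is a hypothetical example rather than the exact expression used on this page, and Python is used here only to try the pattern against a few sample queries before pasting it into the regex filter.

```python
import re

# Hypothetical pattern: queries that begin with a question word.
QUESTION_PATTERN = r"^(who|what|where|when|why|how)\b"
QUESTION_QUERY = re.compile(QUESTION_PATTERN, re.IGNORECASE)

# Made-up sample queries for testing the pattern.
queries = [
    "how long does seo take",
    "seo gold coast",
    "what is a backlink",
    "showroom hours",
]
questions = [q for q in queries if QUESTION_QUERY.search(q)]
print(questions)
```

Queries that match are good candidates for pages that answer a question directly and concisely.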

RegEx for SEO

A headless browser is a web browser without a graphical user interface (GUI).

An example is Headless Chrome: a specialised mode of the Google Chrome browser that operates without a GUI.

The text #:~:text= is used in URLs to link directly to a specific piece of text on a webpage, highlighting it when the page is opened - an example-:

https://www.goldcoastlogin.com.au/optimising-for-brand-seo#:~:text=Apple AirPods sale

Say you have a specific part of a page you would like your visitors to go to (for me it is often reminding clients to refresh the browser to see an updated page). The highlight text link can be used to send them to the specific section you are referring to. It is a great policy to have details in your website - and not a great policy to insist people wade through a page to find it. Using the ability of most browsers to highlight text helps here. So I can send an email with the following link-:

https://www.goldcoastlogin.com.au/gold-coast/news/NewsArticle.jsp?News_ID=143#:~:text=Refresh your browser to remove the old cached version
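Links like these can be generated rather than typed by hand. The sketch below percent-encodes the text so that spaces and special characters survive in emails and chat apps; the function name is an assumption. Hyphens are encoded explicitly because a hyphen has a special meaning inside a text directive.

```python
from urllib.parse import quote


def text_fragment_url(page_url: str, text: str) -> str:
    """Build a link that highlights `text` when the page is opened."""
    # quote() leaves "-" untouched, so encode it separately.
    encoded = quote(text, safe="").replace("-", "%2D")
    return f"{page_url}#:~:text={encoded}"


link = text_fragment_url(
    "https://www.goldcoastlogin.com.au/optimising-for-brand-seo",
    "Apple AirPods sale",
)
print(link)
```

The text must match what appears on the page, otherwise the browser opens the page without highlighting anything.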

One theory explaining the time lag for SEO to start to work is to keep the "cause and effect" obscure. Making SEO difficult would help Google generate ad revenue.

Often we see an article take 2 months or more to rank well, even on an established well-ranking site. If the content is deemed "news" or something that is "timely" it can rank straight away, but often will drop off quickly as it is no longer "timely".

This is guesswork. However, if you write enough articles that eventually rank well, following a proven formula helps. There is no one and only formula for SEO. There are no exact rules for article length or format.

An SEO needs a good memory. The concepts of freshness and content decay are slightly different. One obvious reason freshness is an issue is to work against SEOs who use dodgy guest posts.

If your site gets to the top, how long will it continue to rank? Surprisingly, this can actually be years.

Some websites will get to the top with SEO and then stay there without any paid SEO, sometimes for years, even on competitive keywords. We rarely see a website get to the top without some quality behind it. But in terms of staying there, it is possible with no budget or a minuscule one. Again, one theory for this is that it makes it difficult for new entrants, the only alternative being to pay for Google ads.

HTML is the code that sits on the server and when opened in a Browser displays the page online. Almost every page's HTML can be viewed on your browser i.e. you can actually view the code written by the developer.

An Impression in SEO speak is when your page is displayed in an organic search result.

Google records this information for every search made. This information is very useful because an SEO can discover new opportunities.

Impressions are crucial for SEO because they measure how frequently your website or content appears in search results. You can view your impression statistics via Google Search Console.

A good strategy for some sites is to broaden the Impressions as much as possible. Cast a wide net. This can be useful when there are only limited specific terms to choose and rank on.

Internal links are the links and anchor text that point to other pages on your own site. There can be some issues where Google needs to choose which page refers to which topic (keyword). You can effectively cannibalise your site by having pages compete. There is nothing wrong with having two pages listed, which can often be the home page and the main page for that keyword.

Google does take Internal Links into account when ranking your site.

An IP Address is a unique string of numbers separated by full stops that identifies each computer using the Internet Protocol to communicate over a network.

The IP Address of your site and the IP Addresses of links to your site are factors Google takes into consideration. If most of the links to your site are only from one IP Address it will not be as powerful as multiple IP Addresses. Also if you are a local business on the Gold Coast and you have a string of links coming from one specific destination in a foreign country Google will likely regard those links as less valuable or even outright spam.

Keywords are the words you target in Google for your site. Hence if I wanted to come up highly in Google on SEO Gold Coast - those words would be called my Keywords.

Google Analytics has a field called Keyword under the menu Traffic Sources> Search> Organic

Other stats packages may call these Search Key phrases

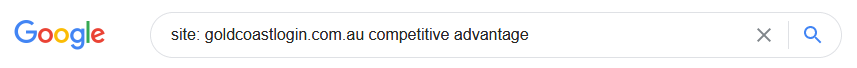

Often you may be looking for a page on your own site that contains a certain term. You can search each page individually with a browser search function. To search an entire site for a specific keyword, use the site: operator. The example below searches for the keyword "competitive advantage" and comes up with the best matches on this entire site.

site:goldcoastlogin.com.au competitive advantage

Say you have written a really good blog on "competitive advantage" and need to find the best pages with related content within a certain site - this search is for you.

Keyword stuffing is where website text overuses keywords in the vain hope of the site improving on those keywords. Google can penalise sites it considers to be keyword stuffing. By penalise we really mean not promote.

Some SEO tools emphasise a certain number of keywords in a post or page, some also specify a certain length. More importance should be placed on updating articles, formatting them effectively and using good unique images. Google is very adept at picking unique quality writing.

There is no precise formula for SEO success - take a look around and notice all sorts of sites, many that do not even validate but they still are listed in search engines.

A Google leak revealed Google stores several metrics around "Last Significant Update". How Google determines if updates to a page are significant is speculation.

We were aware of how often a page is crawled and how this correlates with high-ranking pages, especially product pages. It makes sense to rank a page that is often updated with quality information. Developing systems to update pages quickly also makes sense. As an SEO you want familiar systems to work with, as they can be very quick to update.

Lazy loading is a strategy to identify resources as non-blocking (non-critical) and load these only when needed. Often this is applied to images that are not in the screen view. This can dramatically reduce the loading time for some pages.

A link or hyperlink or backlink is how one page comes up when something is clicked on another page. They are coded into the site. Links are a valuable commodity for advancing a site in Google. Learning how to create quality links pointing to your site is a very important SEO factor. It remains the most important factor in improving SEO rankings.

A verified statistic is 55.24% of web pages don't have backlinks - meaning there are many poor websites out there.

Here is a definitive article on rules for links. It is over 6000 words; however, you may appreciate your SEO more if you can confirm they understand these issues.

From Google Webmaster Guidelines:

Any links intended to manipulate PageRank or a site's ranking in Google search results may be considered part of a link scheme and a violation of Google's Webmaster Guidelines. This includes any behaviour that manipulates links to your site or outgoing links from your site.

Links purchased for advertising should be designated as such. Refer to No Follow Link.

Google's WebMaster Guidelines: "Not all paid links violate our guidelines."

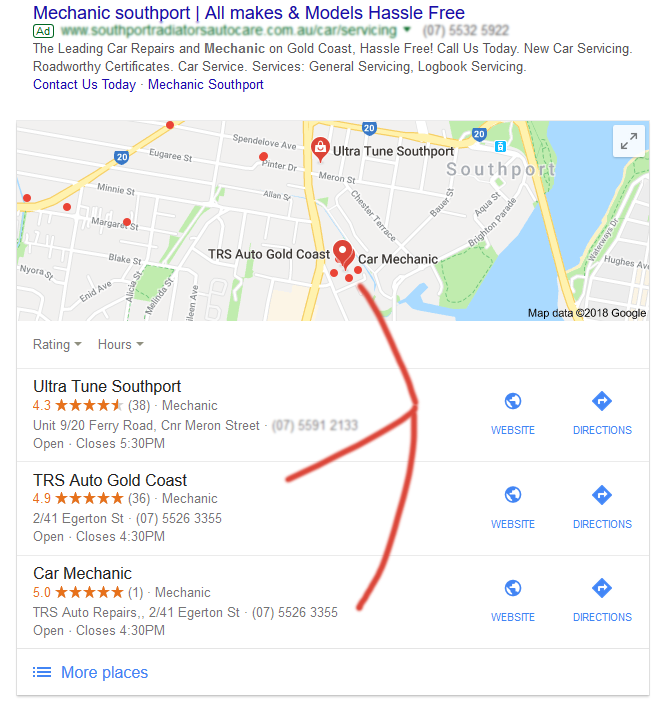

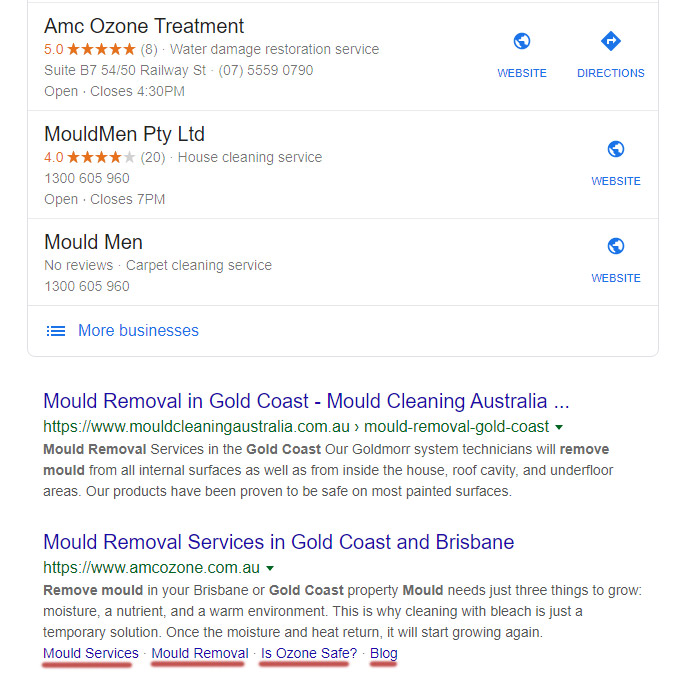

What constitutes a local search is very important to SEOs because being recognised as a local company can give you 2 entries on the first page in a search.

Check our article The anatomy of a local Google search

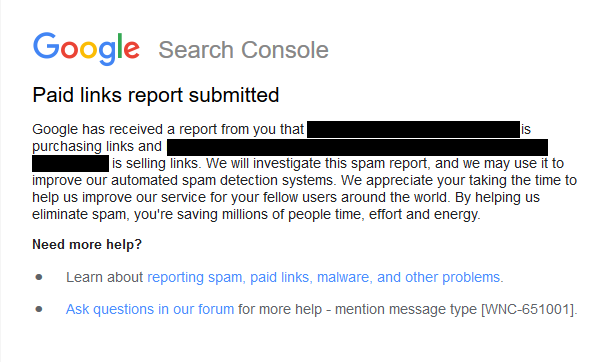

If you are found to have paid links to your site and those links do not include a No Follow or Sponsored annotation you may be penalised by Google.

1 Google will discount the links.

2 Google will penalise you via a human issuing a Manual Action.

A Manual Action will show up in your Google Console Manual Actions report-:

Security & Manual Actions>Manual Actions>

Metadata is simply data about data. In the Head section of a website are some important SEO tags and general tags. Metadata or Meta tags contain all sorts of information useful to search engine robots and even tags used to help display your content in social media well. For example a tag can be used to tell Facebook which image to use for this page. Given that social media sites have different image size preferences this information is useful to having your page shared across various platforms consistently.

Mobile-First Indexing was a change in how Google indexed pages. They switched from using the desktop version of pages to the mobile version. This means that content and links seen on mobile pages are what are ultimately indexed. Hence if you have content that does not display or is hidden in a mobile version of your site, it will not be crawled like it would have on the desktop version.

Owning multiple domains can be a good strategy. It is not as common as say keyword optimisation or other SEO techniques. It favours SEOs who are web developers. Some companies create microsites to capitalise on niches. Some buy sites because they are not efficient at creating sites.

A link or hyperlink that does not pass on PageRank value and indicates to search robots not to follow that link as they crawl the web. These are used in Facebook and Twitter automatically for links. Part of the reason for this is that if these links on popular social media sites did add SEO value it would be chaos with spammers constantly creating fake accounts in an attempt to add value to the sites they link to.

Social media can be used for SEO value, but not by adding spammy links to fake posts on unpopular sites.

rel="nofollow" is added to a link.

The "noindex" directive tells search engines not to include a specific page in their search results. You can add this instruction in the HTML head section using the robots meta tag or send it in the X-Robots-Tag HTTP header.

Use "noindex" when you want to keep a page from showing up in search results but still allow search engines to read the page's content. This is helpful for pages that users can see but that you don't want search engines to display, like thank-you pages or internal search result pages.

<meta name="robots" content="noindex" />

This is a security issue and has no effect on SEO.

rel="noopener" is added to a link.

Ahrefs say "The good news is, since 2020, most browsers automatically process links with target_blank (open in new tab/window) as if rel="noopener" is set on them."

It is SEO completed away from your website that makes the most difference. Up to 75% of the value added to a site is from 'Off-page SEO'. External blogs, backlinks, social media posts and YouTube content are our preferred methods. Some SEOs will submit sites to directories and forums, or use other strategies. The strategy you use does matter, and the cost of SEO does matter as well.

A protocol to allow webpages to be represented in Social Media. For example Facebook uses the Open Graph Protocol to properly represent a webpage shared on its platform. It is one consistent mechanism helping to keep websites as simple as possible. To turn your web pages into graph objects, you need to add basic metadata to your page.

For example og:image is used to tell Facebook which image should be included with your blog post if it is shared.
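To see which Open Graph values a page exposes, something like the following sketch could be used. It reads meta tags whose property starts with og: using Python's standard html.parser. The sample tags and the example.com image URL are invented for the illustration.

```python
from html.parser import HTMLParser


class OpenGraphParser(HTMLParser):
    """Collects og:* properties from <meta> tags (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.properties = {}

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        prop = attributes.get("property") or ""
        if tag == "meta" and prop.startswith("og:"):
            self.properties[prop] = attributes.get("content")


# Made-up head section
sample_head = """
<meta property="og:title" content="SEO Glossary of Terms" />
<meta property="og:type" content="article" />
<meta property="og:image" content="https://example.com/images/glossary.png" />
"""

og = OpenGraphParser()
og.feed(sample_head)
print(og.properties["og:image"])
```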

Organic Search refers to a search result that is not paid for via a system like Adwords. The result is there because Google considers the page to be popular. The art of a good SEO is to get your site ranking on keywords. We use a number of tools for this, plus a lot of observation. For example if we notice a keyword starting to do well, that makes a good candidate for more concentrated efforts. The more search traffic a keyword attracts the more competition you will face.

The HTML Title tag may read like <title>Title of my site</title> and would normally display in the browser tab. Google can change titles and often treats the ones listed on your page as suggestions. Often it just accepts them; at other times it presents different ones depending on a number of factors. Think of titles as dynamic rather than fixed.

"Participating in link schemes violates Google's Webmaster Guidelines and can negatively impact a site's ranking in search results."

1 Use a link profiling tool to analyse the links to a specific website.

2 Note the links on sites that appear unnatural, links that are unlikely to be acquired naturally.

3 A site with massive numbers of foreign links, or links on "contrived sites" (sites that are not related to the target website), can be a sign of a link scheme or paid links.

If you analysed the strategies of some SEO companies you may not use them. Often thinly disguised link schemes are used to undermine search results. The costs are passed on in higher monthly SEO fees.

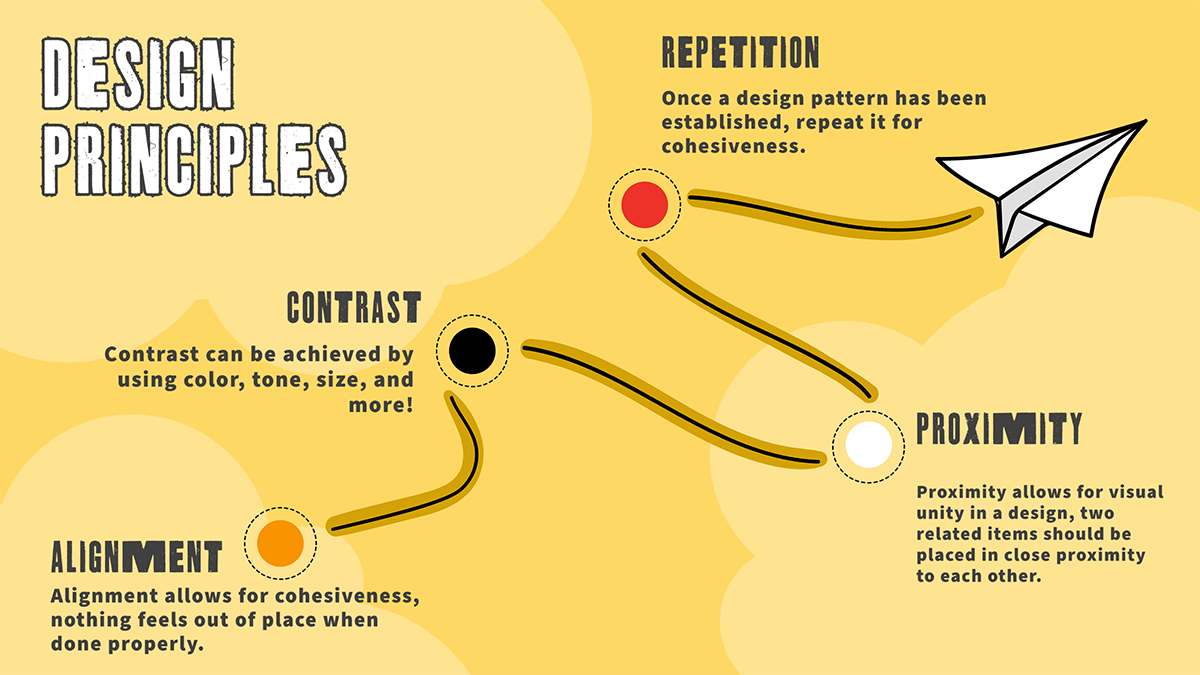

A User Interface Design Concept to apply to design-:

Proximity - associating a design element with another eg text near an image or a caption

Alignment - how an element is aligned for example consistently aligning left

Repetition - following consistent design rules

Contrast - use of contrasting elements to stand out

An example of a site that has been severely penalised in Google for being over optimised.

The SEO has been caught out with dodgy links all with the same anchor text on multiple poor quality domains. Note the blue line is an attempt to remove many of those links. The organic traffic has dropped off to nearly nil.
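One way to spot the "same anchor text everywhere" pattern is to measure how much of a backlink profile each anchor text accounts for. The sketch below assumes you have exported (linking domain, anchor text) pairs from a link tool; the data here is entirely made up and no particular percentage is an official threshold.

```python
from collections import Counter

# Hypothetical backlink export: (linking domain, anchor text)
backlinks = [
    ("cheap-links-1.example", "best plumber gold coast"),
    ("cheap-links-2.example", "best plumber gold coast"),
    ("cheap-links-3.example", "best plumber gold coast"),
    ("local-news.example", "Smith Plumbing"),
    ("supplier.example", "https://smithplumbing.example"),
]


def anchor_share(links):
    """Return each anchor text's share of all backlinks, largest first."""
    counts = Counter(anchor.lower() for _, anchor in links)
    total = sum(counts.values())
    return [(anchor, count / total) for anchor, count in counts.most_common()]


for anchor, share in anchor_share(backlinks):
    print(f"{share:.0%}  {anchor}")
```

A single keyword-rich anchor dominating the list is the kind of unnatural pattern worth a closer look.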

Even world-renowned SEOs have been penalised for over-optimisation. However penalising an SEO is one thing - penalising a site that has been over-optimised by an SEO is really putting your clients' future at risk.

A site that is over-optimised may have keyword stuffing and paid links of a poor quality. Some SEOs cannot recover from this scenario because they do not have access to the sites with the paid links.

In August 2022 Google rolled out the Helpful Content Update (HCU), principally aimed at sites designed for search engines and not necessarily people.

The effect on some sites was dramatic-:

Ahrefs used as the software creating the estimates above.

An example of the drop off in estimated organic clicks from a site affected by the Google Helpful Content Update.

Google cited the following points referring to sites that create content for search engines first-:

Is the content primarily to attract people from search engines, rather than made for humans?

Are you producing lots of content on different topics in hopes that some of it might perform well in search results?

Are you using extensive automation to produce content on many topics?

Are you mainly summarizing what others have to say without adding much value?

Are you writing about things simply because they seem trending and not because you'd write about them otherwise for your existing audience?

Does your content leave readers feeling like they need to search again to get better information from other sources?

Are you writing to a particular word count because you've heard or read that Google has a preferred word count? (No, we don't).

Did you decide to enter some niche topic area without any real expertise, but instead mainly because you thought you'd get search traffic?

Does your content promise to answer a question that actually has no answer, such as suggesting there's a release date for a product, movie, or TV show when one isn't confirmed?

There are a number of factors that affect how your page ranks in Google. This page lists over 200 ranking factors. Ranking factors are not officially published by Google but many are clear and quite obvious. Also Google attempts to give searchers a good user experience so well researched quality content will always rank well. Google can check via various methods whether your site has quality content.

Referrer Spam is when a site makes constant website requests but it is using an incorrect (fake) referrer URL. Hence the analytics of the receiving website reflect the fake URL. It looks as if the spammer linked to the website, however it is really an attempt to be seen as a link by search engines and a positive gain for the spammer. However this requires the stats eg AWSTATS to be publicly visible. It's best to keep these hidden via passwords. Google's Penguin anti-spam algorithm update pretty much eliminated the benefit of this scam.

If a page is updated, usually by FTP or through a Content Management System, you may still see the old cached version. To be sure, refresh the browser to force the cache to be cleared and show the most recent page. Some browsers use the Shift + Refresh option to force reloading of all resources. Ctrl + F5 is another option on Windows.

A Regular Expression (regex) is a sequence of characters that specifies a search pattern. You can use it to create advanced filters to include or exclude more than just a word or a phrase or a character. Validation techniques use regex to specify certain characters or character patterns in input fields. Server logs can be analysed quickly and regex can also be used for server Unix commands.

The regex ^[ \t]+|[ \t]+$ matches excess whitespace at the beginning or end of a line.
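Here is a small sketch of that pattern at work in Python. The sample text is invented, and the MULTILINE flag is added so that ^ and $ apply to every line rather than only to the start and end of the whole string.

```python
import re

# The pattern from the text: spaces or tabs at the start or end of a line
EDGE_WHITESPACE = re.compile(r"^[ \t]+|[ \t]+$", re.MULTILINE)

# Made-up text with stray spaces and tabs
raw = "  Gold Coast SEO\t\n\tWeb Development  \nNo change"
cleaned = EDGE_WHITESPACE.sub("", raw)
print(repr(cleaned))
```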

Regex used in scripting and on servers adds efficiency. Hence a multi-skilled SEO will have tools to quickly update and change information that is in text format.

In Java, File_Name = File_Name.replaceAll("\\W", "-"); uses a regex to replace every non-word character with a -.

Using a text editor that accepts regular expressions allows you to quickly update tags, for example with a find on H2|H3 to match both heading levels.
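The same two ideas can be sketched in Python. The file name and HTML are invented, and rewriting the headings to h4 is only an assumption chosen to show the alternation; the text does not say what the tags should become.

```python
import re

# Python counterpart of the Java replaceAll("\\W", "-") call mentioned above
file_name = "summer sale (2024).jpg"
safe_name = re.sub(r"\W", "-", file_name)
print(safe_name)

# Alternation: match both h2 and h3 tags (opening and closing) in one pass.
# Turning them into h4 is just an illustrative choice.
html = "<h2>Services</h2><p>Text</p><H3>Pricing</H3>"
demoted = re.sub(r"<(/?)(?:h2|h3)\b", r"<\1h4", html, flags=re.IGNORECASE)
print(demoted)
```

Note that \W also replaces the dot before the file extension, so in practice you may want to split the extension off first.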

Not so long ago there was a mad rush for everyone to get mobile versions of their websites. 'Enterprising' companies were ringing people up and perplexing them with reasons as to why this was so important.

It is important to have a site that adjusts to the device it is being displayed on seamlessly. The best technique is to use advanced coding techniques that adjust the display to the screen size. Responsive images are also important - these adjust to the width of the screen. Check our article on responsive website vs a mobile version.

Google uses advanced techniques via its web master tools suite that can analyse how your site performs as a mobile version.

An article on Anatomy of a Local Search - compares search results on a local search term. Understanding how your site can benefit from a local listing via Google My Business. One mechanism within Google My Business is reviews.

For larger websites the robots.txt is essential to give search engines very clear instructions on what content not to access. Search crawlers like Google have to be economical with the pages they crawl. A sitemap can also be used to inform search engines which files need to be indexed. However if a sitemap is out of date, search engines can still index new pages.

Ecommerce sites can display the same information using different parameters. For example you may have a function to sort products of a category in multiple ways. We do not need every one of those pages with the same information (just ordered differently) indexed in Google. Often Google discovers this anyway, but it does not hurt to explicitly tell crawlers not to crawl certain patterns. Another example may be if you are adding a product to a shopping cart. This may have a certain URL - robots.txt supports simple wildcard characters (* and $, not full RegEx) so we can tell crawlers to skip URLs with a certain character pattern. More efficient crawls decrease the load on your own server as well.
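A quick way to sanity-check simple rules is Python's standard urllib.robotparser. The robots.txt content and the shop.example URLs below are hypothetical. As far as I am aware this parser only does plain prefix matching, so the sketch sticks to path prefixes; wildcard rules are better tested with Google's own tools.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of cart and internal search URLs
robots_txt = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

for url in (
    "https://shop.example/products/blue-widget",
    "https://shop.example/cart/add?id=42",
    "https://shop.example/search?q=widget",
):
    verdict = "allowed" if parser.can_fetch("*", url) else "blocked"
    print(verdict, url)
```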

Be wary of blocking scripts and other resources as this may prevent Google from seeing your page as intended. Keep a check on the Pages menu item in Google Search Console. This will indicate any issues you may have.

Search Operators are shortcuts to filter results in a search engine. Here are some of my favourites-:

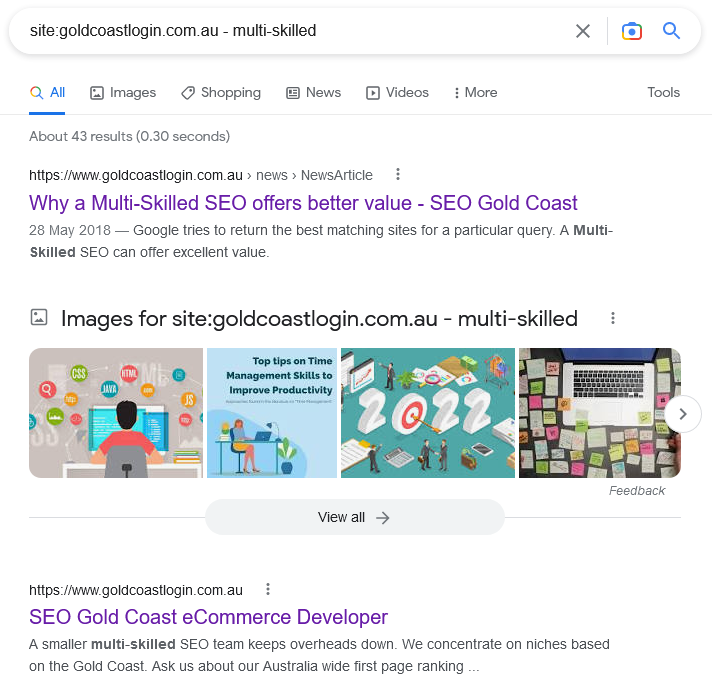

site:goldcoastlogin.com.au - multi-skilled

Limit results to those from a specific website.

This is a really efficient search operator when you add a filter - multi-skilled as we have in the above example. It is going to return the pages in ranking order on the site goldcoastlogin.com.au that feature the word multi-skilled.

As an SEO this information is useful when you want to add more content or quickly find the content Google regards as best matching this term on your site.
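If you run this kind of check often, the search URL can be built in a script. This is only a sketch: the site_search_url helper is a made-up name, and it simply encodes the site: operator plus a phrase into a standard Google search URL.

```python
from urllib.parse import urlencode


def site_search_url(domain, phrase):
    """Build a Google search URL limited to one site (illustrative helper)."""
    query = f"site:{domain} {phrase}"
    return "https://www.google.com/search?" + urlencode({"q": query})


print(site_search_url("goldcoastlogin.com.au", "multi-skilled"))
```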

A full list of Search Operators is available at Ahrefs Search Operators

Google publishes Search Volume figures, and you can use Google Trends to compare how interest in a keyword changes over time. You may use tools to discover related keywords; these are a benefit in setting up the structure of your site. A website owner may have a certain keyword in mind. Verifying there are actually good search volumes for that keyword is important. We make web development decisions based on keyword analysis and search volumes.

You may decide to target a term with low search volumes if that particular keyword can add value to your business.

In this article Problems with keyword search volume they conclude the numbers are all wrong.

"A couple of things. First, only one person really knows and that's Google, and they're not telling the truth. It is widely believed that Google is understating the search volume of many keywords, especially long tails keywords. That's because they want to make it look like it's not worth the effort to get organic search traffic by doing content marketing targeting long-tail keywords (which is my strategy)."

Our search results correlate with this - predicted volumes for a search are low, yet the number of actual clicks on a site, verified by Google Search Console, is above the entire month's predicted volume.

In other words Google is predicting a search volume it knows by its own data is underestimated.

Search Volumes are very useful to home in on targets - value exists in long-tail keywords (keywords that are more specific). Check the actual results via Google Search Console and build on them.

An SEO Friendly URL has keywords in the structure as opposed to parameters like https://www.goldcoastlogin.com.au/item?News_ID=6.

An example-:

https://www.goldcoastlogin.com.au/assess-your-ecommerce-opportunities
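A keyword-based URL like this is usually generated from the page title. The slugify function below is a minimal sketch of one common approach, not necessarily how this site builds its URLs.

```python
import re


def slugify(title):
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    slug = title.lower()
    # Any run of characters other than a-z or 0-9 becomes a single hyphen
    slug = re.sub(r"[^a-z0-9]+", "-", slug)
    return slug.strip("-")


print(slugify("Assess Your Ecommerce Opportunities!"))
```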

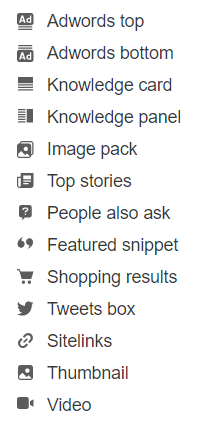

Search Engine Results Page (SERP). The days of 10 blue links are long gone - almost any keyword you put into Google will have a bunch of SERP features: sitelinks, videos, tweets, knowledge cards, etc.

A server is a computer often running a Linux operating system primarily used to display web pages. They are pretty much the same as a normal computer but are configured to serve up web pages over the Internet. The performance of a server and the configuration of sites on it are critical to performance in speed tests.

A server records every activity in logs. A visitor arrives and every file request is recorded in a special format that allows analysis (Common Log Format). We can read these logs and derive useful information. For example, if there are numerous suspicious requests for a page, we can find the IP address and look at the requests made. Often you will notice malicious requests attempting to hack into your database.
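As a sketch of that kind of analysis, the code below parses a few Common Log Format lines and counts 404 responses per IP address. The log lines are invented (using documentation-only IP ranges) and the not_found_by_ip helper is a hypothetical name.

```python
import re
from collections import Counter

# Common Log Format: host ident user [time] "request" status bytes
CLF_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+)'
)

# Made-up log lines
log_lines = [
    '203.0.113.9 - - [10/Mar/2025:10:00:01 +1000] "GET /login.php HTTP/1.1" 404 512',
    '203.0.113.9 - - [10/Mar/2025:10:00:02 +1000] "GET /wp-login.php HTTP/1.1" 404 512',
    '198.51.100.7 - - [10/Mar/2025:10:00:05 +1000] "GET /index.html HTTP/1.1" 200 10240',
    '203.0.113.9 - - [10/Mar/2025:10:00:06 +1000] "GET /admin/ HTTP/1.1" 404 512',
]


def not_found_by_ip(lines):
    """Count 404 responses per IP address; repeated misses can hint at probing."""
    counts = Counter()
    for line in lines:
        match = CLF_PATTERN.match(line)
        if match and match.group("status") == "404":
            counts[match.group("ip")] += 1
    return counts


print(not_found_by_ip(log_lines).most_common())
```

An address that keeps hitting login or admin URLs that do not exist is a good candidate for a closer look.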

Analysis of server logs also helps look at user behaviour. If your site is poorly made you will notice a whole bunch of files being loaded that are unnecessary. Many poor website structures or plugins load unused files on a page. Hence you see some sites run very slowly.

Sitelinks are added to certain search results. "We only show sitelinks for results when we think they'll be useful to the user." Looking at the above example it is a clear advantage to have the site links. These links are automated by Google. These are internal links into the site, displayed to aid the person searching.

Often site structure refers to how the site is categorised and how it relates to the menu. One of the failures of a WordPress site IMO is its lack of structure. A deep structure suits sites with long paths looking for refined search terms.

This page https://www.goldcoastlogin.com.au/gold-coast/SEO/SEO-Glossary-Of-Terms.html has a structure suited to "SEO Glossary of Terms - Gold Coast". Many WordPress sites would have a flat structure with the keywords tacked on the end.

A deep website structure enables you to create very exact and targeted topical pages on your website.

Your menu structure should reflect the importance of the categories within your site. Making a decision on a menu item is far more important than it would seem. You are in effect deciding by your menu structure which pages you will emphasise. Changing them later is not the same, because you lose the valuable Google brownie points earned by having a good structure that Google clearly understands. When you change the name of a page it has an effect. It is best to get the naming right first. Our approach is to actually agonise on the structure, then quickly get the site live.

It is critical to ensure your site is crawled effectively by Google. A good site structure will help Google categorise your content and make good AI decisions. Sometimes you can cannibalise your own content or you may be lucky enough to have 2 pages that rank for a specific keyword.

It is recommended to keep every page within 4 clicks. Rarely will we have a page that requires more than 3 clicks to get to.
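Click depth can be checked with a breadth-first search over the site's internal links. The sketch below assumes you already have a map of which page links to which; the site_links data is a made-up example, not this site's real structure.

```python
from collections import deque

# Hypothetical internal link graph: page -> pages it links to
site_links = {
    "/": ["/seo/", "/web-development/"],
    "/seo/": ["/seo/glossary/", "/seo/local/"],
    "/web-development/": ["/web-development/ecommerce/"],
    "/seo/glossary/": ["/seo/glossary/nofollow/"],
    "/seo/local/": [],
    "/web-development/ecommerce/": [],
    "/seo/glossary/nofollow/": [],
}


def click_depths(links, home="/"):
    """Breadth-first search giving the fewest clicks from the home page to each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


depths = click_depths(site_links)
too_deep = [page for page, depth in depths.items() if depth > 3]
print(depths)
print("Pages deeper than 3 clicks:", too_deep)
```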

In 2015, Twitter and Google made a deal that gave Google access to Twitter's full stream of tweets, known as the "Firehose". This allows tweets to be found on search engine result pages (SERPs). Refer to Twitter for SEO to use Twitter for SEO of your site.

Social signals are the engagement - likes, shares, and comments - your content receives on social media platforms like Facebook, Twitter, Instagram, and Pinterest. You may notice many Pinterest images on Google Image search. Whether Google regards Social Signals as a ranking factor is a topic of some debate. We do notice blogs with popular social media posts do well in search results (SERPs).

Spam in SEO terms is referring to a spammy site or a spammy link. Google engineers spend vast amounts of time creating algorithms to weed out spam and spammy sites. An example of a spammy link would be a page on a forum where a person adds an entry purely to contain a link to their site in the hope of improving results in organic searches.

Another example of a spammy site is one made from copied images and cut and paste text - Google is all over sites like this and they are going nowhere fast.

SpamBrain is Google's AI detection mechanism for spammers and low-quality websites.

"Even if you are not a spammer Google can recognise low quality content"

"Google also highly discourages using techniques commonly associated with spam or low-quality content. These include manipulative keywords, cloaking, hidden text, and too many ads."

The algorithms "learn" from your website and improve over time. If they find that your site is still spammy, they will continue to punish you.

Google used this AI based spam-prevention system for the first time in December 2022.

Site speed is increasingly important for higher rankings.

1. Google will crawl your pages more easily

2. It naturally ranks sites higher because the user experience is better.

Beware of open source sites created by inexperienced developers

Many open source systems are penalised (in my opinion) because each page loads many plugins. These plugins come with libraries of scripts that are used for extra "cool" functionality. For example you may have a slide show on the home page - why then load scripts that run that on other pages like About Us?

Unfortunately there is a big difference in size between a custom JavaScript file and one that is used for many functions. In many cases I see sites that are reloading these scripts on every page including pages that are not even using that functionality. Hence you can see sites that look great but run very slowly and do not do as well as a truly custom optimised site. This also allows web developers to look like they can create effective sites - but in fact are creating ineffective bloated slow sites from a template - and unfortunately many of these go on to claim to be expert SEOs.

Check your site speed - Note you can check from various global locations and check the size of the page plus a few other useful insights. If your site does not do well on this score talk to us for a detailed explanation.

Google has a very useful tool to analyse site speed.

Check our article Website speed claims put to the test - often sites claiming to be properly optimised are still loading scripts that aren't even needed on the page.

SSL is short for Secure Sockets Layer, and it's a standard type of data encryption technology. Google announced it was factoring SSL into its search ranking algorithm. However initially it was difficult to see any real improvement when a site switched from http to https.

There were some pretty wild claims about the need for SSL on a website with no actual need for it. Some unscrupulous developers saw it as a way of gaining extra revenue.

If you use your credit card over the internet the site must have SSL encryption to be safe.

2021: Gary Illyes, Google: "HTTPS ranking signal would never rearrange the search results. It only makes a difference if two pages have the same score in the other factors."

Originally the only statistics available for a website were crude counters and products like Awstats that analysed web logs. Now there are also products like Google Analytics and Search Console that measure Google search clicks and impressions (when your site comes up in a search).

Google Analytics has some useful information. For example you may discover via Google Search Console a page that gets clicked on in a search. Google Analytics can also tell you how many other pages on average are viewed (Pages per session). So when creating SEO content we can see which articles drive traffic (clicks) and result in a prolonged visit (many pages are clicked).

Logs are useful and start with the IP address of the visitor and then list other information that stats packages like Awstats break up and present in graphs etc. A good SEO will use this information to look for success or new keyword opportunities. These logs can also be useful for eliminating hacking attempts because if for example an IP address is constantly going to a specific page trying to hack it you can then block that IP address (just make sure it is not a Google crawler).

Good SEO often translates to a knowledge of the server - there is never too much information. An efficient high quality web server will improve a site's speed, reduce spam attacks and increase up-time.

Also refer to Bounce Rate

Technical SEO refers to technical aspects related to On-Page SEO: ensuring your site validates, does not contain duplicate content, is structured correctly and uses SSL. Many also recommend using Structured Data and sitemaps to explicitly define what your content is about.

Hosting and the platform used deserve a mention here because you want your site to run as efficiently as possible. We often see sites slowed down because the platform loads every script on every page. Even when using caching and other techniques the page is still actually loading unnecessary content and runs slower than it would if it did not load all the unused content.

With the advent of AI Overviews there has been a trend of Impressions rising and Clicks falling. AI Overviews count towards your Impressions in Search Console.

The reason it is called "the great decoupling" is that traditionally your Impressions and Clicks were in lock-step. Impressions are great: you are appearing in search engines. Clicks are where you get the visitor to your site.
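The decoupling shows up as a falling click-through rate (clicks divided by impressions). The monthly figures in this sketch are invented purely to illustrate the calculation and are not real Search Console data.

```python
# Hypothetical monthly Search Console totals: (month, impressions, clicks)
monthly = [
    ("Jan", 10_000, 500),
    ("Feb", 14_000, 490),
    ("Mar", 20_000, 400),
]


def click_through_rate(impressions, clicks):
    """Clicks as a fraction of impressions (0.0 when there are no impressions)."""
    return clicks / impressions if impressions else 0.0


for month, impressions, clicks in monthly:
    print(f"{month}: {click_through_rate(impressions, clicks):.1%}")
```

In this made-up data impressions double while clicks fall, so the rate drops from 5.0% to 2.0%.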

One study by marketing tool maker Ahrefs noted an increase in the quality of clicks from AI Overviews. They found the conversions from the AI clicks were higher.

Creating clean code is a very important factor. A site validated by W3C is regarded highly by Google. Valid code naturally increases Site Speed. Validation means that the site adheres to standards. When a new device is created, even devices we have not thought of yet, valid code means the device should display your code properly if it is compliant with standards.

How can you tell if your site is valid and has clean code?

You can look at the code by right-clicking and choosing view source and you can copy and paste that code into the W3C validator to see if the code validates to standards.

A 301 redirect tells Google that the page/URL/Document/Image now resides at this new location. If Google has to find and re-index those images again you may find your unique image is now listed higher by a copycat domain. The process of undoing that can be frustrating. Hence when moving a site to a new domain name, 301 Redirects help maintain most of the previous value of the page/URL/Document/Image.
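The logic behind a redirect map can be sketched in a few lines. The paths, the example.com URLs and the resolve function are all hypothetical; on a real server this is normally handled in the web server or platform configuration rather than in a script like this.

```python
# Hypothetical mapping from old paths to their new permanent locations
REDIRECTS = {
    "/item?News_ID=6": "https://www.example.com/assess-your-ecommerce-opportunities",
    "/old-about.html": "https://www.example.com/about",
}
KNOWN_PAGES = {"/", "/about", "/assess-your-ecommerce-opportunities"}


def resolve(path):
    """Return (status code, redirect target or None) for a requested path."""
    if path in REDIRECTS:
        return 301, REDIRECTS[path]  # moved permanently: point to the new URL
    if path in KNOWN_PAGES:
        return 200, None
    return 404, None  # nothing here and no redirect defined


print(resolve("/old-about.html"))
print(resolve("/about"))
print(resolve("/missing-page"))
```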

Page not found. 404 errors are common and almost every website will pick up at least a few 404 errors over time. The main causes of a 404 error are either that a page was deleted or it was moved to a new location.

404 errors also occur when spammers attempt to guess a URL. They do this in an attempt to exploit known issues or logins. 404 errors can occur when malicious scripts alter parameters of URLs in an attempt to extract data from your database.

Most 404 errors are malicious, however some arise where a site has been upgraded, so careful attention is needed to ensure the new site structure is set up correctly.

I have kept this glossary simple to cover terms that are most often used and beneficial for clients to get a quick understanding of the SEO jargon. Part of my SEO technique is to teach my clients as much as possible to help them self promote their sites.

A Gold Coast SEO and Web Developer